Understanding the LSM-Tree Paper

This paper introduces the Log-Structured Merge-Tree (LSM-Tree), a disk-based data
structure tailored for environments with high rates of record insertions
(and deletions), such as transaction logs or history tables. It
contrasts the LSM-Tree with traditional indexing methods like B-Trees, which
struggle under heavy write workloads due to excessive disk I/O. The
LSM-Tree aims to minimize this I/O cost by leveraging a combination of
memory and disk storage, batching operations, and optimizing for
sequential writes.

1. Summary of the Paper

The LSM-Tree is a write-optimized data structure designed to:

- Handle High Insert/Delete Rates Efficiently: It batches and defers index updates, avoiding immediate disk writes for every operation.
- Use a Hybrid Structure: It combines a memory-resident component (C₀ tree) with one or more disk-resident components (C₁, C₂, etc.), allowing fast in-memory writes and efficient disk management.
- Reduce Disk I/O Costs: Compared to B-Trees, it cuts down on random disk operations, lowering the overall system cost (e.g., disk arm usage).

Context: The paper uses the TPC-A
benchmark (a transaction processing standard) as a case study. In TPC-A,
each transaction inserts a row into a History Table. Maintaining a
real-time index (e.g., by account ID) with a B-Tree incurs significant I/O costs, while the
LSM-Tree reduces this significantly.

2. Core Concepts

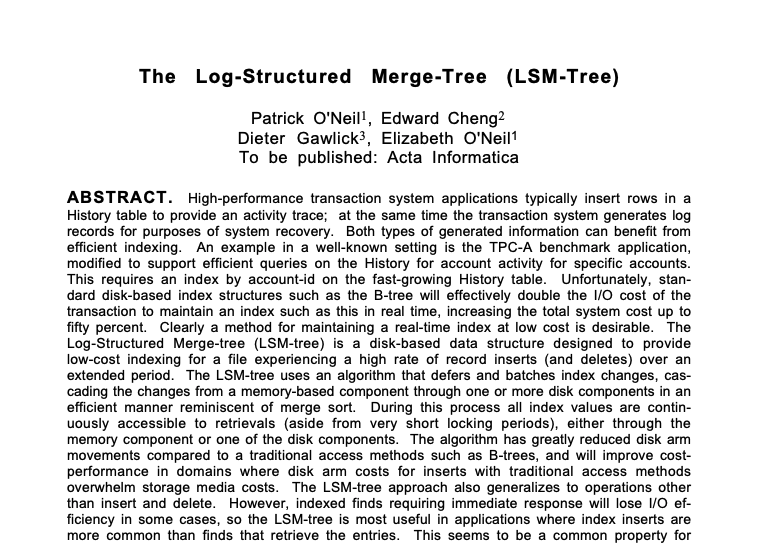

2.1 The Problem with Traditional Indexing (B-Trees)

- High Insert Cost: B-Trees require immediate updates to disk for each insert. For every new entry, a leaf node must be read into memory (1 I/O), updated, and written back (another I/O), totalling at least 2 I/Os per insert.
- Random Disk Access: Since insertions can occur anywhere in the tree (e.g., random account IDs in TPC-A), these I/Os are random, causing disk arm movement (seek time and rotational latency), which is slow and expensive.
- System Cost Impact: In high-throughput systems (e.g., 1000 transactions per second), this doubles the disk requirements, increasing costs by up to 50% (e.g., needing 50 extra disk arms in the TPC-A example).

Analogy with the Paper: Imagine a
librarian (disk arm) updating a card catalog (B-Tree) by physically
walking to a random drawer for every new book added, which is inefficient and
time-consuming.
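To make the arithmetic behind these figures concrete, here is a small back-of-the-envelope sketch. The 2 I/Os per insert and the 1000 transactions per second come from the text above. The function name `btree_index_disk_arms` and the rate of 40 random I/Os per disk arm per second are assumptions, chosen only so that the result lines up with the 50-arm figure quoted above.

```python
import math


def btree_index_disk_arms(inserts_per_sec: float,
                          ios_per_insert: int = 2,
                          ios_per_arm_per_sec: float = 40.0) -> int:
    """Estimate the disk arms needed to absorb random B-Tree index I/O.

    ios_per_arm_per_sec is an assumed rate, not a figure from the text.
    """
    total_ios_per_sec = inserts_per_sec * ios_per_insert
    return math.ceil(total_ios_per_sec / ios_per_arm_per_sec)


# 1000 inserts/s * 2 I/Os = 2000 random I/Os per second.
arms = btree_index_disk_arms(1000)
print(arms)  # 50 under the assumed 40 I/Os per arm-second
```

Changing the assumed per-arm rate changes the arm count proportionally, which is why random I/O translates so directly into hardware cost.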

2.2 The Solution

- Memory Buffering: New inserts go into a memory-resident C₀ tree, which is fast (no I/O) and acts as a temporary buffer.
- Batched Disk Writes: When C₀ fills up, its contents are merged with the disk-resident C₁ tree in batches, using sequential writes instead of random ones.
- Efficiency Gain: This reduces disk arm movement, leveraging the cheaper cost of sequential I/O (e.g., multi-page block writes) over random I/O.

Analogy with the Paper: Instead of
updating the catalog for every book, the librarian writes new entries in
a notebook (C₀) and periodically transfers a sorted batch to the catalog
(C₁) in one go, which is faster and takes less effort.
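The buffering idea can be sketched as a toy two-component structure. This is a minimal illustration, not the paper's implementation: the class name `TwoComponentLSM`, the `c0_limit` threshold (counted in entries), and the use of a dict for C₀ and a sorted list standing in for the on-disk C₁ are all assumptions made for the example. It also merges all of C₀ at once rather than in rolling segments.

```python
import bisect


class TwoComponentLSM:
    """Toy model: C0 is an in-memory dict, C1 is a sorted list standing in for disk."""

    def __init__(self, c0_limit: int = 4):
        self.c0_limit = c0_limit   # hypothetical threshold, in entries
        self.c0 = {}               # memory-resident component
        self.c1 = []               # sorted (key, value) pairs, "on disk"
        self.batch_writes = 0      # how many batched merges have run

    def insert(self, key, value):
        self.c0[key] = value       # the insert path touches memory only
        if len(self.c0) >= self.c0_limit:
            self._merge_c0_into_c1()

    def _merge_c0_into_c1(self):
        merged = dict(self.c1)     # older entries first
        merged.update(self.c0)     # newer C0 entries win on duplicate keys
        self.c1 = sorted(merged.items())
        self.c0.clear()
        self.batch_writes += 1

    def get(self, key):
        if key in self.c0:         # check the newest data first
            return self.c0[key]
        i = bisect.bisect_left(self.c1, (key,))
        if i < len(self.c1) and self.c1[i][0] == key:
            return self.c1[i][1]
        return None


tree = TwoComponentLSM(c0_limit=3)
for k in [42, 7, 19, 3, 88]:
    tree.insert(k, f"row-{k}")
```

After these five inserts, only one batched merge has happened: keys 7, 19, and 42 sit in C₁ in sorted order, while 3 and 88 are still buffered in C₀. A lookup has to consult both components, which is the read-side cost of deferring writes.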

3. Breaking down the LSM Tree Algorithm

While implementing this paper, I learnt a lot about tree data
structures and their types. I also used resources like this
[Link]
to learn more in depth about B-Trees and B+ Trees.

3.1 Two Component Model

- C₀ Tree (Memory-Resident)
  - Purpose: Acts as the entry point for all new inserts, keeping them in memory for speed.
  - Structure: Can be any efficient in-memory structure (e.g., AVL or 2-3 tree), not tied to disk page sizes.
  - Size Limit: Constrained by memory capacity (e.g., 20 MB in one example), as memory is expensive compared to disk.
  - Recovery Note: Since C₀ is in memory, its contents must be recoverable after a crash (e.g., via transaction logs).
- C₁ Tree (Disk-Resident)
  - Purpose: Stores the bulk of the data long-term on disk, which is cheaper but slower.
  - Structure: Similar to a B-Tree but optimized for sequential access: nodes are 100% full and packed into multi-page blocks (e.g., 256 KB blocks).
  - Access: Frequently used nodes (e.g., directory nodes) may stay in memory buffers, but leaf nodes typically require disk I/O.
- Data Flow: Inserts go to C₀ → C₀ merges into C₁ when full.


A schematic shows C₀ as a small, fast memory layer feeding into the
larger, slower C₁ disk layer. The takeaway:
batching in C₀ avoids the immediate 2 I/O penalty of B-Trees, deferring
disk writes until a merge is needed.

3.2 The Rolling Merge Process

Trigger: When C₀ hits
a size threshold (e.g., near its memory limit), a "rolling merge"
begins.

Steps

- Select a Segment: A contiguous range of entries from C₀ (e.g., smallest key values) is chosen.
- Read from C₁: A multi-page block of C₁ leaf nodes is read into memory (e.g., 256 KB containing multiple 4 KB pages).
- Merge: C₀ entries are combined with C₁ entries, keeping the result sorted.
- Write Back: The merged result is written to a new multi-page block on disk, not overwriting the old one (for recovery purposes).
- Repeat: The process continues, moving through C₀ and C₁ like a "cursor," looping back to the start after reaching the end.
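A single merge step from the list above can be sketched as follows. This is an illustrative sketch under stated assumptions: the function name `merge_segment` and the `block_size` parameter (entries per output block) are hypothetical, entries are plain `(key, value)` pairs, and "writing to disk" is modeled by returning new lists. When the same key appears in both inputs, the sketch assumes the C₀ entry is newer and keeps it.

```python
import heapq


def merge_segment(c0_segment, c1_block, block_size):
    """Merge a sorted C0 segment with a sorted C1 block into new blocks.

    Both inputs are lists of (key, value) pairs sorted by key.
    The inputs are left untouched; the merged result goes into new blocks,
    mirroring the "do not overwrite the old block" step.
    """
    merged = []
    # heapq.merge is stable, so for equal keys the C0 entry is seen first.
    for key, value in heapq.merge(c0_segment, c1_block, key=lambda kv: kv[0]):
        if merged and merged[-1][0] == key:
            continue  # older C1 duplicate; the C0 entry already won
        merged.append((key, value))
    # Pack the sorted result into completely filled blocks (the last may be partial).
    return [merged[i:i + block_size] for i in range(0, len(merged), block_size)]


c0_segment = [(2, "new"), (5, "e")]
c1_block = [(1, "a"), (2, "old"), (9, "z")]
new_blocks = merge_segment(c0_segment, c1_block, block_size=2)
print(new_blocks)  # [[(1, 'a'), (2, 'new')], [(5, 'e'), (9, 'z')]]
```

A full rolling merge would call a step like this repeatedly, advancing through the key range and wrapping around at the end, which the sketch leaves out.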

Efficiency:

- Sequential I/O: Multi-page block reads/writes (e.g., 64 pages in 125 ms vs. 20 ms per random page) reduce seek and latency costs.
- Batching: Multiple C₀ entries (e.g., 10 per C₁ page) are merged in one I/O cycle, unlike B-Trees' one-entry-per-I/O model.
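The example numbers above can be turned into a rough per-entry comparison. The timings (64 pages in 125 ms, 20 ms per random page) and the batch size (10 entries per page) come from the text. The cost model itself is a simplifying assumption: a B-Tree insert pays 2 random page I/Os, while a merged page pays one sequential read and one sequential write shared by the whole batch. The function names are hypothetical.

```python
def sequential_ms_per_page(pages_per_block: int = 64, block_ms: float = 125.0) -> float:
    """Amortized cost of one page inside a multi-page block transfer."""
    return block_ms / pages_per_block


def btree_ms_per_entry(random_page_ms: float = 20.0, ios_per_insert: int = 2) -> float:
    """Each insert pays its own random read and random write."""
    return random_page_ms * ios_per_insert


def merge_ms_per_entry(entries_per_page: int = 10) -> float:
    """One sequential read plus one sequential write, shared by the batch."""
    return 2 * sequential_ms_per_page() / entries_per_page


print(sequential_ms_per_page())                      # 1.953125 ms per page
print(20.0 / sequential_ms_per_page())               # 10.24x cheaper per page
print(btree_ms_per_entry())                          # 40.0 ms per entry
print(merge_ms_per_entry())                          # 0.390625 ms per entry
```

Under this simplified model, the gain comes from two multiplied factors: the cheaper per-page cost of block I/O and the number of entries sharing each page.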


The figure depicts a cascade of components, showing how data flows from memory to
deeper disk levels. The takeaway: multi-component
LSM-Trees scale efficiently by distributing data across multiple disk
layers, optimizing both memory and disk usage.
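The cascade can be illustrated with a toy multi-component model. Everything here is a hypothetical simplification: the class name `MultiComponentLSM`, the per-component `capacities` (in entries), and the use of plain dicts in place of sorted trees. It also moves a whole component down at once when it fills, whereas the paper describes a rolling merge.

```python
class MultiComponentLSM:
    """Toy cascade: components[0] is C0 (memory); the rest stand in for C1, C2, ..."""

    def __init__(self, capacities):
        # capacities[i] is the assumed entry limit for Ci; the last component is unbounded.
        self.capacities = capacities
        self.components = [dict() for _ in range(len(capacities) + 1)]

    def insert(self, key, value):
        self.components[0][key] = value
        self._cascade()

    def _cascade(self):
        for i, cap in enumerate(self.capacities):
            if len(self.components[i]) >= cap:
                # Ci holds newer data than Ci+1, so its entries win on duplicates.
                self.components[i + 1].update(self.components[i])
                self.components[i].clear()

    def get(self, key):
        for comp in self.components:   # search newest component first
            if key in comp:
                return comp[key]
        return None


lsm = MultiComponentLSM(capacities=[2, 4])
for k in range(10):
    lsm.insert(k, f"row-{k}")

print([len(c) for c in lsm.components])  # [0, 2, 8]
```

After ten inserts, most entries have settled in the deepest component, while the most recent ones are still in the shallower levels, so a lookup walks the components from newest to oldest.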

Intuition

I always go with phrases that can explain anything to anyone, with no need
for prior context. I recommend that every engineer build this
habit during the learning phase of their life.

Remember being a librarian a while back? Now you are managing a
massive, ever-growing catalog of books (like transaction logs or history
records) where new entries pour in constantly, but people rarely look
them up. A traditional catalog (like a B-Tree) forces you to walk to a
random shelf, pull out a card, update it, and put it back every time a
book arrives, which is slow and exhausting due to all the back-and-forth (random
disk I/O). The LSM-Tree is like a smarter system: you jot down new
entries in a small notebook (memory-based C₀ tree) that’s quick to use.
When the notebook fills up, you don’t update the main catalog
one-by-one; instead, you sort the batch and merge it into a big,
organized ledger (disk-based C₁ tree) in one smooth sweep (sequential
writes). For really huge libraries, you might add more ledgers (C₂, C₃,
etc.), passing data down in stages. This cuts down on frantic running
around (disk arm costs), saving time and effort (I/O overhead). Finding
a book (querying) takes a bit longer since you check the notebook and
ledgers, but that’s okay because adding books (inserts) is the priority.
In short, the LSM-Tree trades a little read speed for a lot of write
efficiency, perfect for systems drowning in new data but light on
lookups.
